We all know the importance of listening; of connecting with others by being present and authentic to deeply hear their thoughts, ideas, and feelings. We work hard at listening without judgment, carefully, with our full attention to connect and respect.

But are we hearing them without bias? I contend we’re not. And it’s not our fault.

WHAT IS LISTENING?

From the work I’ve done unpacking how our brains make sense of incoming messages, I believe that listening is far more than hearing words and understanding another’s shared thoughts and feelings.

There are several problems with us accurately hearing what someone says, regardless of our intent to show up as empathetic listeners. Listening is actually a brain thing that has little to do with meaning: our brains determine what we hear. And they weren’t designed to be objective. There are two primary reasons:

- Words, considered meaningless puffs of air by neuroscientists, are meant to be semantic transmissions of meaning, yet emerge from our mouths smooshed together in a singular gush with no spaces between them.

- Our brains then decipher individual sounds, individual word breaks, unique definitions, to understand their meaning. No one speaks with spaces between words. Otherwise. It. Would. Sound. Like. This. Hearing impaired people face this problem with new cochlear implants: it takes about a year for them to learn to decipher individual words, where one word ends and the next begins. When others speak, their words enter our ears as meaningless electrochemical sound vibrations – puffs of air without denotation until our brain translates them, paving the way for misunderstanding.

- Due to the way sound vibrations are turned into electrochemical signals in our brains and uniquely translated according to historic neural circuits, our ears hear what we’ve heard before, not necessarily an accurate rendition of what a speaker intends to share.

Just as we perceive color when light receptors in our eyes send messages to our brain to translate the incoming light waves (the world has no color), meaning is a translation of sound vibrations that have traversed a very specific brain pathway after we hear them.

As such, I define listening as

our brain’s progression of making meaning from incoming sound vibrations – an automatic, electrochemical, biological, mechanical, and physiological process during which spoken words, as meaningless puffs of air, eventually get translated into meaning by our existing neural circuitry, leaving us to understand some unknown fraction of what’s been said – and even this is biased by our existing knowledge.

HOW BRAINS LISTEN

I didn’t start off with that definition. Like most people, I had thought that if I gave my undivided attention and listened ‘without judgment’, I’d be able to hear what a Speaker intended. But I was wrong.

When writing my book WHAT? on closing the gap between what’s said and what’s heard, I was quite dismayed to learn that what a Speaker says and what a Listener hears are often two different things.

It’s not for want of trying; Listeners work hard at empathetic listening. But the way our brains are organized makes it difficult to hear others without bias.

Seems everything we perceive is translated (and restricted) by the circuits already in our brains. If you’ve ever heard a conversation and had a wholly different takeaway than others in the room, or understood something differently from the intent of the Speaker, it’s because brains have a purely mechanistic and historic approach to translating incoming content.

Here’s a simplified version of what happens when someone speaks:

– the sound of their words enters our ears as mere vibrations (meaningless puffs of air),

– and face dopamine, which distorts the incoming message/sound vibrations according to our beliefs.

– What’s left gets turned into electro-chemical signals (also meaningless) that

– get sent for translation to existing circuits, with

– a ‘close-enough’ match to historic circuits

– that then discard whatever doesn’t match

– causing us to ‘hear’ some unknown fragments of messages

– translated through circuits we already have on file (i.e., we translate incoming words through our historic circuits, making it almost impossible to accurately hear what’s been said)!

It’s mechanical. And it’s all biased by our own history, regardless of what a speaker says or intends. We hear some subjective version of what we already know.

The worst part is that during the process, when our brain discards signals that don’t match our history, it doesn’t tell us! So if you say “ABC” and the closest circuit match in my brain is “ABL” my brain discards D, E, F, G, etc. and fails to tell me what it threw away!

That’s why we believe what we ‘think’ we’ve heard is accurate. Our brain actually tells us that our biased rendition of what it thinks it heard is what was said, regardless of how near or far that interpretation is from the truth.

With the best will in the world, with the best empathetic listening, by being as non-judgmental as we know how to be, as careful to show up with undivided attention, just about everything we hear is naturally biased. [Note: to address this problem, I developed a unique training that first generates new neural circuits before offering new content so the brain will accurately understand, then retain, the new without bias.]

IT’S POSSIBLE TO GET IT ‘RIGHTER’

The problem is our automatic, mechanistic brain. We can’t easily change the process itself (I’ve been developing brain change models for decades; it’s possible to add new circuits), but it is possible to interfere with it.

I’ve come up with two ways to listen with more accuracy:

- When listening to someone speak, stand up and walk around, or lean far back in a chair. It’s a physiologic fix, offering an Observer/witness viewpoint that goes ‘beyond the brain’ and disconnects from normal brain circuitry. I get permission to do this even while I’m consulting at Board meetings with Fortune 100 companies. When I ask, “Do you mind if I walk around while listening so I can hear more accurately?” I’ve never been told no. They are happy to let me pace, and sometimes even do it themselves once they see me do it. I’m not sure why this works or how. But it does.

- To make sure you take away an accurate message of what’s said, say this:

To make sure I understood what you said accurately, I’m going to tell you what I think you said. Can you please tell me what I misunderstood or missed? I don’t mind getting it wrong, but I want to make sure we’re on the same page.

Listening is a fundamental communication tool. It enables us to connect, collaborate, care, and relate with everyone. By going beyond Active Listening, by adding Brain Listening to empathetic listening, we can now make sure what we hear is actually what was intended.

______________________________

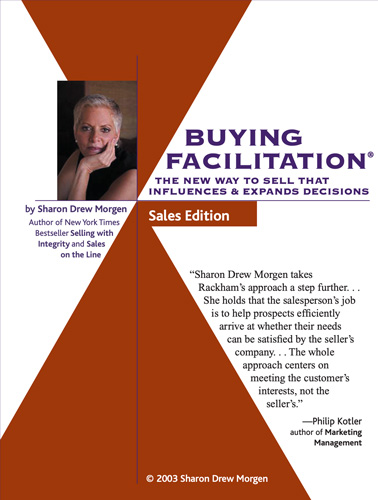

Sharon-Drew Morgen is a breakthrough innovator and original thinker, having developed new paradigms in sales (inventor of Buying Facilitation®), listening/communication (What? Did you really say what I think I heard?), change management (The How of Change™), coaching, and leadership. She is the author of several books, including her new book HOW? Generating new neural circuits for learning, behavior change and decision making, the NYTimes Business Bestseller Selling with Integrity, and Dirty Little Secrets: why buyers can’t buy and sellers can’t sell. Sharon-Drew coaches and consults with companies seeking out-of-the-box remedies for congruent, servant-leader-based change in leadership, healthcare, and sales. Her award-winning blog carries original articles with new thinking, weekly: www.sharon-drew.com. She can be reached at sharondrew@sharondrewmorgen.com.